Free Advice On Deepseek

페이지 정보

작성자 Mellissa 작성일25-01-31 07:23 조회1회 댓글0건관련링크

본문

Chinese AI startup DeepSeek launches DeepSeek-V3, an enormous 671-billion parameter model, shattering benchmarks and rivaling top proprietary systems. This smaller mannequin approached the mathematical reasoning capabilities of GPT-four and outperformed another Chinese mannequin, Qwen-72B. With this mannequin, DeepSeek AI showed it could effectively course of high-resolution photographs (1024x1024) inside a hard and fast token price range, all while keeping computational overhead low. This mannequin is designed to process large volumes of data, uncover hidden patterns, and supply actionable insights. And so when the model requested he give it access to the internet so it might perform more analysis into the character of self and psychosis and ego, he mentioned sure. As companies and builders deep seek to leverage AI extra efficiently, DeepSeek-AI’s latest release positions itself as a top contender in each normal-purpose language tasks and specialised coding functionalities. For coding capabilities, DeepSeek Coder achieves state-of-the-art performance amongst open-supply code fashions on a number of programming languages and various benchmarks. CodeGemma is a collection of compact fashions specialised in coding duties, from code completion and generation to understanding natural language, solving math problems, and following directions. My analysis mainly focuses on pure language processing and code intelligence to allow computers to intelligently course of, perceive and generate each natural language and programming language.

LLama(Large Language Model Meta AI)3, the following generation of Llama 2, Trained on 15T tokens (7x greater than Llama 2) by Meta comes in two sizes, the 8b and 70b version. Continue comes with an @codebase context provider constructed-in, which lets you mechanically retrieve essentially the most relevant snippets from your codebase. Ollama lets us run giant language models domestically, it comes with a fairly simple with a docker-like cli interface to begin, stop, pull and checklist processes. The DeepSeek Coder ↗ models @hf/thebloke/deepseek-coder-6.7b-base-awq and @hf/thebloke/deepseek-coder-6.7b-instruct-awq are actually obtainable on Workers AI. This repo contains GGUF format model files for deepseek ai china's Deepseek Coder 1.3B Instruct. 1.3b-instruct is a 1.3B parameter model initialized from deepseek-coder-1.3b-base and high-quality-tuned on 2B tokens of instruction data. Why instruction wonderful-tuning ? DeepSeek-R1-Zero, a model skilled through large-scale reinforcement learning (RL) without supervised effective-tuning (SFT) as a preliminary step, demonstrated remarkable efficiency on reasoning. China’s DeepSeek group have constructed and launched DeepSeek-R1, a model that uses reinforcement learning to practice an AI system to be ready to make use of check-time compute. 4096, we've a theoretical attention span of approximately131K tokens. To support the pre-coaching part, we have now developed a dataset that currently consists of 2 trillion tokens and is repeatedly expanding.

The Financial Times reported that it was cheaper than its friends with a worth of two RMB for each million output tokens. 300 million images: The Sapiens models are pretrained on Humans-300M, a Facebook-assembled dataset of "300 million diverse human photographs. Eight GB of RAM available to run the 7B models, sixteen GB to run the 13B models, and 32 GB to run the 33B fashions. All this could run totally on your own laptop or have Ollama deployed on a server to remotely energy code completion and chat experiences primarily based in your wants. Before we begin, we wish to mention that there are a large amount of proprietary "AI as a Service" firms reminiscent of chatgpt, claude and so forth. We only need to make use of datasets that we are able to obtain and run regionally, no black magic. Now think about about how lots of them there are. The mannequin was now talking in rich and detailed phrases about itself and the world and the environments it was being exposed to. A year that began with OpenAI dominance is now ending with Anthropic’s Claude being my used LLM and the introduction of a number of labs that are all making an attempt to push the frontier from xAI to Chinese labs like DeepSeek and Qwen.

The Financial Times reported that it was cheaper than its friends with a worth of two RMB for each million output tokens. 300 million images: The Sapiens models are pretrained on Humans-300M, a Facebook-assembled dataset of "300 million diverse human photographs. Eight GB of RAM available to run the 7B models, sixteen GB to run the 13B models, and 32 GB to run the 33B fashions. All this could run totally on your own laptop or have Ollama deployed on a server to remotely energy code completion and chat experiences primarily based in your wants. Before we begin, we wish to mention that there are a large amount of proprietary "AI as a Service" firms reminiscent of chatgpt, claude and so forth. We only need to make use of datasets that we are able to obtain and run regionally, no black magic. Now think about about how lots of them there are. The mannequin was now talking in rich and detailed phrases about itself and the world and the environments it was being exposed to. A year that began with OpenAI dominance is now ending with Anthropic’s Claude being my used LLM and the introduction of a number of labs that are all making an attempt to push the frontier from xAI to Chinese labs like DeepSeek and Qwen.

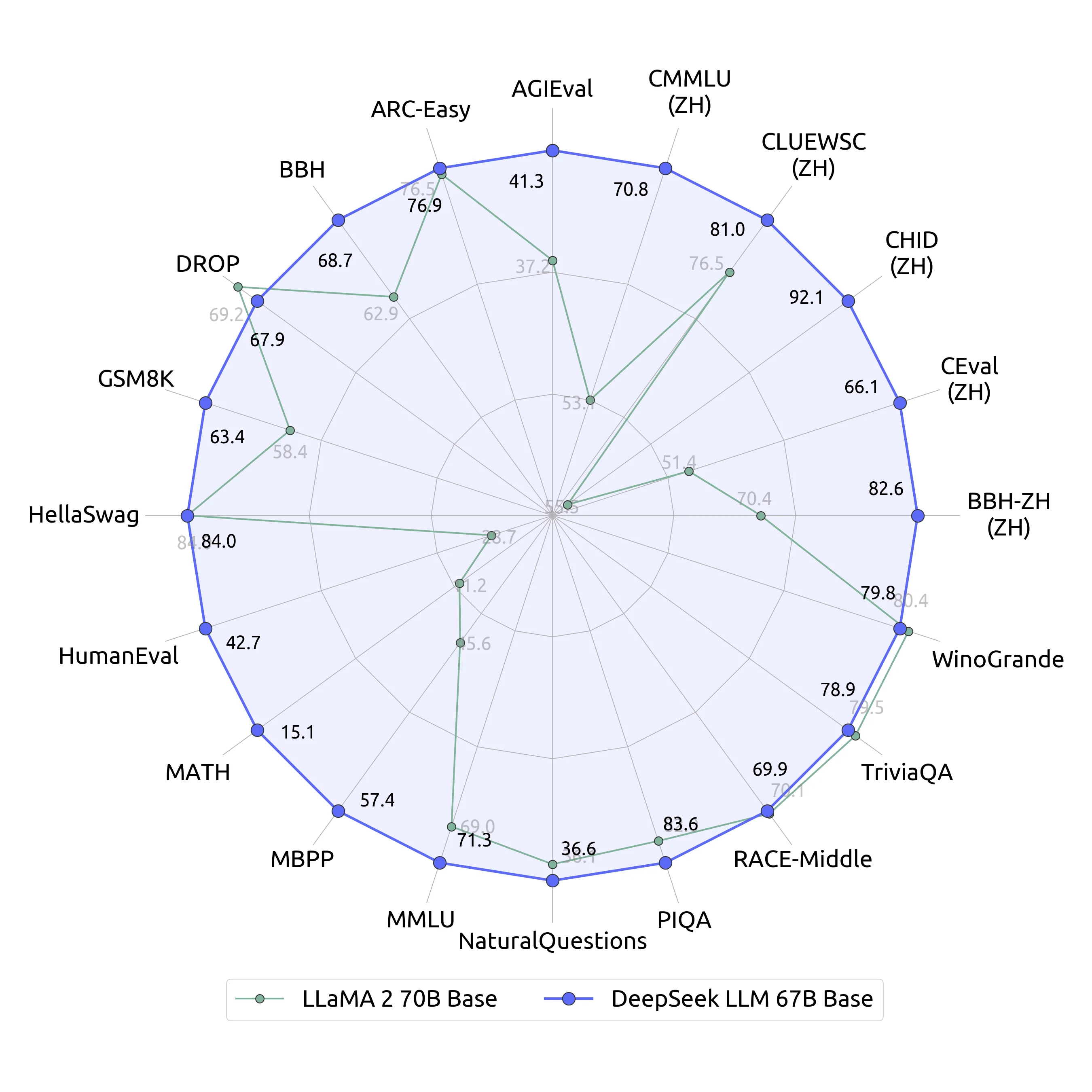

In assessments, the 67B mannequin beats the LLaMa2 model on the majority of its tests in English and (unsurprisingly) all of the assessments in Chinese. Why this issues - compute is the only thing standing between Chinese AI corporations and the frontier labs in the West: This interview is the newest instance of how entry to compute is the only remaining issue that differentiates Chinese labs from Western labs. Why this matters - constraints force creativity and creativity correlates to intelligence: You see this pattern time and again - create a neural internet with a capability to learn, give it a task, then be sure you give it some constraints - here, crappy egocentric imaginative and prescient. Seek advice from the Provided Files table under to see what files use which strategies, and how. A extra speculative prediction is that we will see a RoPE replacement or not less than a variant. It’s considerably more efficient than other models in its class, will get great scores, and the research paper has a bunch of particulars that tells us that DeepSeek has constructed a group that deeply understands the infrastructure required to prepare ambitious fashions. The evaluation outcomes exhibit that the distilled smaller dense fashions perform exceptionally effectively on benchmarks.

댓글목록

등록된 댓글이 없습니다.